Mid-December Review

How’s The Plan going?

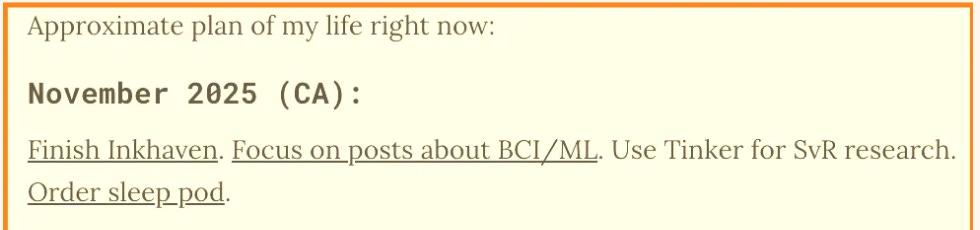

November 2025 (CA)

I finished Inkhaven

I need to de-duplicate some names on the Inkhaven Post Explorer; then I’ll RT it, updated with all 1634 final posts :)

I am still reading Vishal Prasad, who is really very good. “Vibethinking as Bullshit” is a bone-chilling read (which I highly recommend).

I am so blessed to have been part of such a wholesome cohort: Sean on object-level work as an expression of love, Claire on her love for the twins she’s raising, Joanna on mutual support.

Daniel Reeves is really on top of research automation news (good reads in here); I hadn’t been keeping up with AlphaEvolve, Google’s agent for coding and mathematics. I respect that he’s been publishing predictions since 2008. ‘Technological Richter scale’ is semi-delightful.

I saw Amanda at solstice; songs are on Spotify; between this folk-ish music and conscientious objection I’m starting to perceive the rationalist tradition within the broader humanist tradition. I don’t feel like I have (m)any unmet spiritual needs, which is nice.

I focused on posts about BCI / ML :)

Why don’t we explicitly train models to be good at generalization?

Alignment is a predictability problem; whack-a-mole is a losing game, new backdoor types every day

I really enjoyed these two most recent ones — lit review / readlog-style. 2-3-day sprints / deep-dives on a topic are fun.

I did SvR research, and I used Tinker, but I didn’t combine the two.

I don’t think I’ll double down on ‘closing the SvR gap’. I’ve moved away from thinking in terms of values (lossy abstractions that they are), and these days I think more in terms of scenarios: how a model generalizes from approved actions across various scenarios, and how an overseer gives additional context / corrections / feedback…

I ordered a sleep pod :)) We’ll defeat the pigeonhole principle one import at a time. It’s sailing under the flag of Hong Kong!

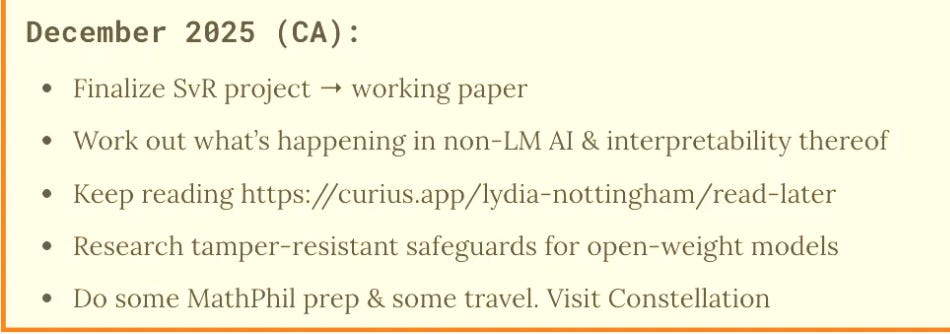

December 2025 (CA)

I can focus on SvR ~1 day/week.

I’m writing up a research proposal on testing models’ self-forecasting abilities. These are projects that I can work on 1-2 days/week.

Sean’s marvelous post on ‘the object-level career’ feels extremely, extremely relevant today. I am noticing that I really want to focus on making AI more predictable, and then there are 1001 epiphenomenal distractions that offer resources to do that.

The OpenAI Residency exists, but I’d have to bid for another rustication or (let’s be real, more likely) drop out. My visa situation favors a U.S.-consistent setup. But frankly, do residencies make sense when I’m not looking / applying for jobs? I feel like there’s a Dutch-booking thing going on here: I prefer finishing my MathPhil degree to taking a job at an org like this, but I still somehow consider applying to these residencies, whose entire purpose is generally to translate into jobs at these orgs.

The Anthropic Fellows Program exists, with the same kinds of parameters, but there a July 2026 start would mean I could at least spend January-July studying.

I am doing enough side projects that I could probably submit one for Neel Nanda’s task, but I know I wouldn’t have time for the exploration phase alongside my studies, so I’m making a firm decision to say no! to this (taking mnestics).

More than anything, I want to first define the work I’m going to do in 2026, and then pick research programs that are compatible with it. I think the work involves identifying and addressing sources of unpredictability in neural networks (their downstream behavior, long-horizon agentic rollouts, etc.). When the world is predictable, the world is safe.

I realized that wanting to work out what’s going on with ‘non-LM AI’ was the Delmore Effect striking again. It’s everywhere—you can’t be too careful. People always want to carve out some research niche for themselves, but it’s a fallacy to do this in a low-priority area. Choose your contrarianism on the right level of abstraction. Keep going deep, deep into the ‘obvious, high-priority’ things; treat popularity as an independent / orthogonal variable that doesn’t affect your own prioritization for better or worse, and the contrarianism will take care of itself—promise.

Why would you condition on something like ‘unpopular architectures’ / limit yourself on this particular axis?

I’ve stopped conceptualizing AI so much in terms of ‘LMs’ and am mostly just thinking in terms of ‘neural networks’ & related scaffolding now.

I do still want to read the SSM and HRM papers. Soon :)

I can…hit my reading lists in the evenings?

I do not know how to balance this with my studies. I think I need to set a rule for myself like “Clock up 6 hours of Oxford MathPhil work; then the rest of the day is your own (ideally spent cultivating connections between the MathPhil and the external content).”

On the plus side, these links are much more appealing to me than socializing for the most part. I am in the market for a few more recurring weekly / fortnightly / monthly 1-1s & then I really want to not think about this too much. I work from Mox and The Commons & I live between Conduit & Ark, so I see lots of humans. :)

I can focus on open-weight model project 1 day/week?

I think I’ve just been distracted by the SvR project finishing up. But I do want to dedicate 1 day/week to this project.

There go my weekends

This feels like something that can slide if something has to.

I’m going to start doing MathPhil during Mon-Fri working hours

Kicking off as soon as I submit the self-forecasting proposal

I visited Constellation. It was broadly unremarkable.

I really enjoyed chatting with people from Theorem (it feels plausible I’ll do a research startup at some point), & Dillon Plunkett is a computational functionalist. I wrote treatises on backdoors but didn’t actually get to chat again with Owain Evans et al. But this was intellectually productive.

Open-source OODA

I need to be realistic about what I can achieve with the time that I have.

I’ll fall back into applying-to-stuff mode in early January, not before. Remember, artifact / publication momentum transfers into other kinds of momentum, but application momentum fizzles out; it’s BS.

It’s kind of a relief to not be applying to things. I like my life best when I’m not. I am going to run out of money at some point on this strat. But I think it’s really important for me to be focusing on actual work right now. So, no-application-December (or like, 15-min event forms only).

One principle I live by: give yourself less to process. Having things to process left, right, and center is what disturbs momentum most. So streamline your life; reduce the number of variables. That’s why I’m so happy to have canceled Guatemala. It’s damn good to be living at home in SF. I wouldn’t want to spend the winter anywhere else.

Christmas in San Francisco, alone. The last five years: Hong Kong (alone), Hong Kong (with kind Renata, college admissions stress), CDMX (on the FTX dime, beautiful Ángel de la Independencia), UK (I remember why I left this life behind; I wish it weren’t this way), Temecula (with the in-laws). Will I ever crack this season? Stay tuned :)

Edit 30/12: Worked it out. Host for your friends :) Much love and appreciation to those who gave activation energy, co-hosts, & people who came!

hmm now I want to do one as well. Good update.

How do you find the time to read so many papers, and how do you self-evaluate whether you have absorbed a paper enough? (Surely the Pareto-efficient point lies somewhere between reading everything and implementing nothing, and reading little and implementing everything.)

Hey, great read as always. Your discussion on explicitly training models for generalization is quite timely; I often think about how diverse movement patterns in Pilates contribute to a more universally adaptable physical form. Do you anticipate future AI architectures will intrinsically prioritize this broader adaptability?